Work With Keras MNIST Data Sets And Advanced Neural Networks

The MNIST data set is a large collection of handwritten digits that is often used to train and test machine learning models. The data set consists of 70,000 grayscale images of handwritten digits, with 60,000 images in the training set and 10,000 images in the test set.

To load the MNIST data set into Keras, we can use the following code:

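```python
from keras.datasets import mnist

(x_train, y_train), (x_test, y_test) = mnist.load_data()
x_train = x_train.reshape(-1, 784).astype('float32') / 255  # flatten 28x28 -> 784, scale to [0, 1]
x_test = x_test.reshape(-1, 784).astype('float32') / 255
```

The `x_train` and `y_train` variables contain the training images and labels, while `x_test` and `y_test` contain the test images and labels. As loaded, each image is a 28×28 array of integer pixel values from 0 to 255, and each label is an integer from 0 to 9. The last two lines flatten every image into a vector of 784 values and scale the pixels to the range 0 to 1, which is the input format expected by the model defined below.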
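The model defined later in this article takes each image as a flat vector of 784 values (`input_shape=(784,)`), while MNIST images are 28×28 arrays of integers from 0 to 255. The sketch below checks the shapes and value ranges involved in that conversion on a small random stand-in array, so it runs without downloading the data. The names `prepare_images` and `fake_images` are hypothetical and exist only for this illustration:

```python
import numpy as np

def prepare_images(images: np.ndarray) -> np.ndarray:
    """Flatten (n, 28, 28) uint8 images to (n, 784) floats in [0, 1]."""
    flat = images.reshape(len(images), -1)
    return flat.astype("float32") / 255.0

# Random stand-in with the same layout as a batch of MNIST images.
rng = np.random.default_rng(0)
fake_images = rng.integers(0, 256, size=(5, 28, 28), dtype=np.uint8)

prepared = prepare_images(fake_images)
print(fake_images.shape, fake_images.dtype)  # (5, 28, 28) uint8
print(prepared.shape, prepared.dtype)        # (5, 784) float32
```

The same function could be applied to the real `x_train` and `x_test` arrays, assuming they still have their original 28×28 layout.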

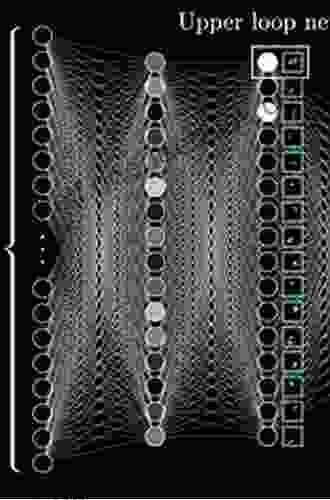

Once we have loaded the MNIST data set into Keras, we can create a simple neural network model to classify the digits in the data set. The following code creates a simple neural network model with two hidden layers:

```python
from keras.models import Sequential
from keras.layers import Dense, Dropout

model = Sequential()
model.add(Dense(512, activation='relu', input_shape=(784,)))
model.add(Dropout(0.2))
model.add(Dense(512, activation='relu'))
model.add(Dropout(0.2))
model.add(Dense(10, activation='softmax'))
```

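The first layer in the model is a dense layer with 512 units and a ReLU activation function; its `input_shape=(784,)` argument means it expects each 28×28 image as a flat vector of 784 values. The second layer is a dropout layer with a rate of 0.2, which randomly sets 20% of its inputs to zero during training to reduce overfitting. The third and fourth layers repeat this pattern: a dense layer with 512 units and ReLU activation, followed by dropout at a rate of 0.2. The fifth layer is a dense layer with 10 units and a softmax activation function, producing one probability for each digit class.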
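To get a feel for the size of this model, the number of trainable parameters can be worked out by hand: a dense layer with n inputs and m units has n × m weights plus m biases, and dropout layers add none. The following sketch is plain Python and assumes the layer sizes from the code above; `dense_params` is a hypothetical helper, not part of Keras:

```python
def dense_params(n_inputs: int, n_units: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return n_inputs * n_units + n_units

# Layer sizes of the model above: 784 -> 512 -> 512 -> 10.
layer_sizes = [784, 512, 512, 10]

per_layer = [
    dense_params(n_in, n_out)
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
]
total_params = sum(per_layer)

print(per_layer)     # [401920, 262656, 5130]
print(total_params)  # 669706
```

The total should agree with the parameter count that `model.summary()` prints for this model.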

Once we have created our neural network model, we can train it on the MNIST data set. The following code trains the model for 10 epochs with a batch size of 128:

```python
model.compile(loss='sparse_categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

model.fit(x_train, y_train, epochs=10, batch_size=128)
```

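The `compile()` method configures the model for training by specifying the loss function, the optimizer, and the metrics to report during training. The `sparse_categorical_crossentropy` loss is used because the labels are integers from 0 to 9 rather than one-hot vectors. The `fit()` method then trains the model on the training data.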
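To illustrate what the `sparse_categorical_crossentropy` loss measures, here is a minimal NumPy sketch. For each example it takes the predicted probability of the true class and returns the mean negative log of those values. The function name `sparse_cce` and the example probabilities are made up for illustration, and Keras's own implementation differs in details such as numerical clipping:

```python
import numpy as np

def sparse_cce(probs: np.ndarray, labels: np.ndarray) -> float:
    """Mean negative log-probability assigned to the true class.

    probs:  (n_samples, n_classes) predicted probabilities, rows sum to 1
    labels: (n_samples,) integer class indices
    """
    true_class_probs = probs[np.arange(len(labels)), labels]
    return float(-np.log(true_class_probs).mean())

# Two made-up predictions over three classes.
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1]])
labels = np.array([0, 1])

loss = sparse_cce(probs, labels)
print(round(loss, 4))  # 0.2899
```

A confident, correct prediction contributes a loss close to 0, while a low probability on the true class is penalized heavily.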

Once we have trained our model, we can evaluate its performance on the test data. The following code evaluates the model on the test data and prints the accuracy:

```python
loss, accuracy = model.evaluate(x_test, y_test)
print('Accuracy:', accuracy)
```
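As a rough illustration of what the printed accuracy measures, the sketch below computes it by hand from a small array of made-up class probabilities. The predicted class is the index of the largest probability in each row, and accuracy is the fraction of predictions that match the labels. The arrays are hypothetical stand-ins for the output of `model.predict(x_test)` and for `y_test`:

```python
import numpy as np

# Made-up softmax outputs for four samples over three classes
# (a real MNIST model would output ten probabilities per image).
probs = np.array([
    [0.8, 0.1, 0.1],
    [0.2, 0.5, 0.3],
    [0.3, 0.3, 0.4],
    [0.6, 0.3, 0.1],
])
labels = np.array([0, 1, 2, 1])

predicted = probs.argmax(axis=1)  # most probable class per row
accuracy = float((predicted == labels).mean())

print(predicted)  # [0 1 2 0]
print(accuracy)   # 0.75
```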

The `evaluate()` method evaluates the model on the test data and returns the loss followed by the value of each metric passed to `compile()`, which here is the accuracy. The `accuracy` variable therefore contains the fraction of test images that the model classifies correctly.

<h2>Advanced Neural Network Architectures</h2>

The simple neural network model that we created in this article is a good starting point for classifying the digits in the MNIST data set. However, there are a number of more advanced neural network architectures worth knowing about, some of which can improve on its performance. They include:

* **Convolutional neural networks (CNNs)**: CNNs are designed for data with a grid-like structure, such as images. They have achieved state-of-the-art results on a wide range of image classification tasks, which makes them the natural next step for MNIST.
* **Recurrent neural networks (RNNs)**: RNNs are designed for sequential data, such as text. They have achieved strong results on a wide range of natural language processing tasks.
* **Generative adversarial networks (GANs)**: GANs are used to generate new data rather than to classify it. They have been shown to generate realistic images, text, and even music.

These are just a few of the many advanced neural network architectures that are available. Choosing an architecture that matches the structure of the data often gives better results than a plain fully connected network.

In this article, we have explored how to work with the Keras MNIST data set and advanced neural networks. We started by loading the MNIST data set into Keras, then created a simple neural network model to classify the digits in the data set. We then trained the model on the MNIST data set and evaluated its performance. Finally, we discussed some advanced neural network architectures that can be used to improve on it.
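To make the CNN idea mentioned above more concrete, the following NumPy sketch applies a single 3×3 filter to a 28×28 image, which is the basic operation a convolutional layer repeats with many learned filters. The helper `conv2d_valid`, the random image, and the hand-picked edge filter are all illustrative assumptions. A real CNN would use a library layer such as Keras's `Conv2D` and learn its filters from data:

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over `image` without padding (cross-correlation,
    which is what deep learning libraries call convolution)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

rng = np.random.default_rng(0)
image = rng.random((28, 28))  # stand-in for one MNIST digit

# A simple vertical-edge filter (hand-picked, not learned).
kernel = np.array([[1.0, 0.0, -1.0],
                   [1.0, 0.0, -1.0],
                   [1.0, 0.0, -1.0]])

feature_map = conv2d_valid(image, kernel)
print(feature_map.shape)  # (26, 26)
```

Because the filter only looks at a 3×3 neighbourhood at a time, the same few weights are reused across the whole image, which is what makes this approach well suited to grid-like data.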
We encourage you to experiment with different neural network architectures and see how they perform on different machine learning tasks. With some experimentation, you can build models that perform well on a wide range of problems.</body></html>
